Last Updated: October 24, 2025

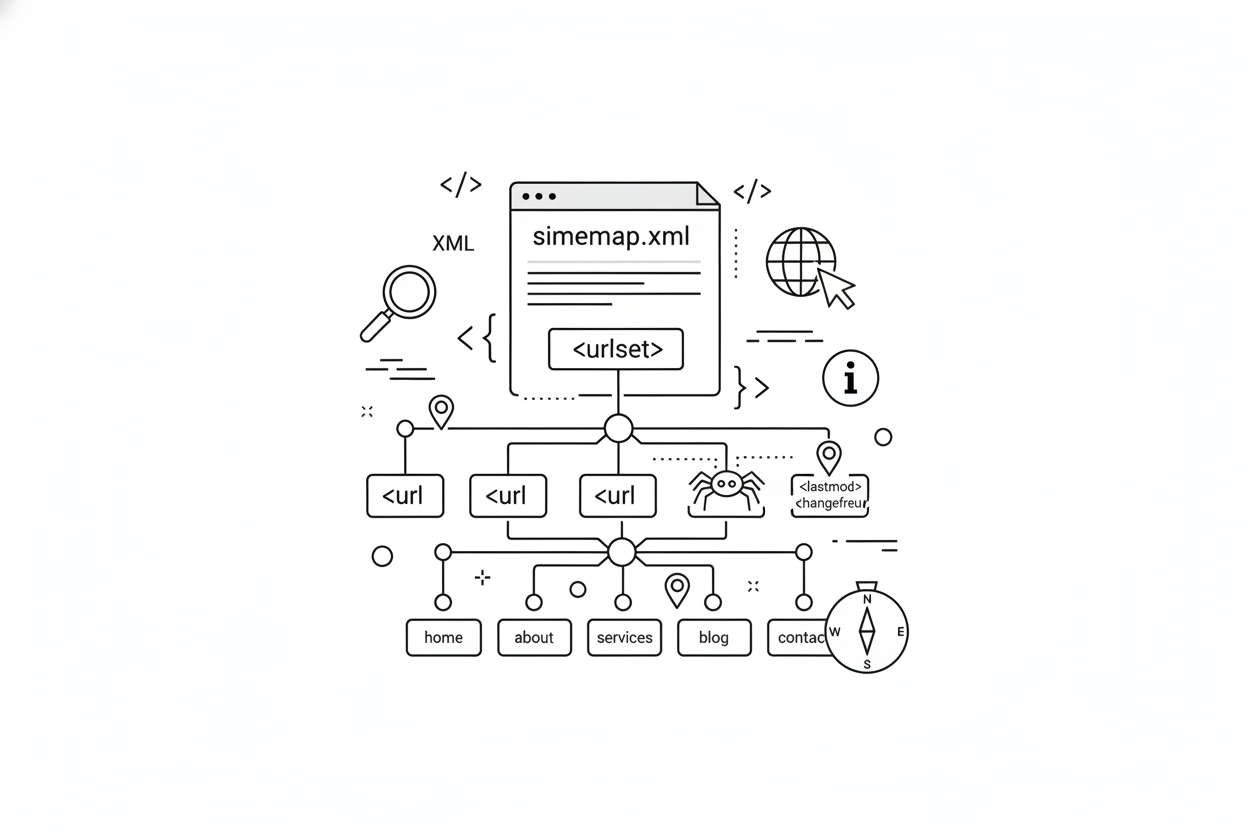

While users navigate your site through menus and links, search engines have another powerful tool for discovery: the XML sitemap. Think of it as a detailed blueprint or table of contents you provide directly to crawlers like Googlebot, explicitly listing the important URLs you want them to find and index.

For small websites, a sitemap might seem like a minor technical detail. However, for large, complex sites with thousands or millions of pages – especially those generated via Programmatic SEO – a well-optimized XML sitemap is absolutely critical for ensuring efficient content discovery and maximizing your crawl budget.

This guide moves beyond basic sitemap generation to provide a strategic framework for creating, managing, and optimizing XML sitemaps to accelerate indexing and improve your overall technical SEO health, forming a crucial part of your site architecture.

Chapter 1: What is an XML Sitemap (And What It Isn't)

An XML sitemap is a file (usually sitemap.xml located at your domain root) that lists URLs on your site, along with optional metadata about each URL:

- <loc> (Required): The full, absolute URL of the page.

- <lastmod> (Optional but Recommended): The date the file was last modified. Crucial for signaling freshness to Google.

- <changefreq> (Optional, Often Ignored): How frequently the page is likely to change (e.g., daily, weekly). Google often ignores this and determines crawl frequency based on other signals.

- <priority> (Optional, Largely Ignored): Indicates the priority of this URL relative to others on your site (0.0 to 1.0). Google largely ignores this value.
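To make the file structure concrete, here is a minimal Python sketch that writes a sitemap containing only <loc> and <lastmod>. The function name build_sitemap and the example.com URLs are illustrative assumptions, not part of any particular CMS; the namespace is the one defined by the sitemaps.org protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML as a string.

    `entries` is an iterable of (url, lastmod) pairs; lastmod may be None.
    build_sitemap is a hypothetical helper name used for illustration.
    """
    ET.register_namespace("", SITEMAP_NS)  # emit xmlns="..." without a prefix
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for url, lastmod in entries:
        node = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        # ElementTree escapes characters such as & in the URL text.
        ET.SubElement(node, f"{{{SITEMAP_NS}}}loc").text = url
        if lastmod:
            ET.SubElement(node, f"{{{SITEMAP_NS}}}lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/", "2025-10-24"),
        ("https://www.example.com/pricing?plan=pro&term=annual", None),
    ]))
```

The sketch leaves out <changefreq> and <priority> because, as noted above, Google largely ignores them.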

What a Sitemap Isn't:

- It's NOT a guarantee of indexing: Submitting a URL in a sitemap doesn't force Google to index it. Content quality, internal linking, and noindex tags still determine indexability.

- It's NOT a primary ranking factor: Having a sitemap doesn't directly boost your rankings. Its benefit is indirect, through improved crawlability and faster discovery.

- It's NOT a substitute for good site architecture: Sitemaps help crawlers find pages, but strong internal linking is still essential for distributing PageRank and showing relationships between pages.

Chapter 2: Why Sitemaps Are Crucial for Large & PSEO Sites

While Google can find pages by following links, sitemaps become essential when:

- Your Site is Large: For sites with tens of thousands of pages, ensuring Googlebot discovers every single URL just through internal links can be challenging. Sitemaps provide a direct list.

- You Have New or Orphaned Content: A new section of your site, or pages with few internal links pointing to them, might take a long time for Googlebot to find organically. Sitemaps accelerate this discovery.

- Your Site Uses Rich Media: Sitemaps can include specific information about video or image content, helping Google understand and index these elements better.

- You Use Programmatic SEO: When you generate thousands of pages programmatically, a dynamic sitemap that automatically includes these new URLs is the most reliable way to inform Google about their existence quickly.

Chapter 3: XML Sitemap Best Practices (The SOP)

Creating a basic sitemap is easy. Creating an optimized one requires attention to detail.

1. Include Only Indexable, Canonical URLs

This is the most critical rule. Your sitemap should only list URLs that:

- Return a 200 OK status code.

- Are canonical (i.e., not duplicates pointing elsewhere via rel="canonical").

- Are indexable (i.e., not blocked by robots.txt or a noindex tag).

Why? Including non-indexable URLs sends conflicting signals to Google and wastes their time processing URLs they ultimately can't use. It reflects poor site health.
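The three conditions above can be expressed as a simple filter applied before a URL is written to the sitemap. The following is a sketch only: the Page record and its field names are assumptions about what a crawl or CMS export might provide, not a real API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Page:
    """Hypothetical record describing one URL, e.g. from a crawl or CMS export."""
    url: str
    status: int                        # HTTP status code returned for the URL
    canonical: Optional[str] = None    # target of rel="canonical", if declared
    noindex: bool = False              # page carries a noindex directive
    blocked_by_robots: bool = False    # URL is disallowed in robots.txt


def is_sitemap_eligible(page: Page) -> bool:
    """Return True only for 200 OK, self-canonical, indexable pages."""
    if page.status != 200:
        return False
    # A canonical pointing at a different URL marks this page as a duplicate.
    if page.canonical is not None and page.canonical != page.url:
        return False
    if page.noindex or page.blocked_by_robots:
        return False
    return True


def sitemap_urls(pages):
    """Keep only the URLs that belong in the sitemap."""
    return [p.url for p in pages if is_sitemap_eligible(p)]
```

Running a filter like this at generation time keeps redirects, duplicates, and noindex pages out of the file, rather than leaving them to be cleaned up after Search Console reports them.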

2. Use Dynamic Generation (Essential for PSEO)

For any site that isn't purely static, your sitemap should be generated automatically by your CMS or backend system whenever content is added, updated, or removed. Relying on manual updates or infrequent generation means Google won't discover your new content promptly.

3. Keep Sitemaps Under the Size Limits

A single sitemap file has limits: 50,000 URLs and 50MB uncompressed.

- The Solution: Sitemap Index Files: If your site exceeds these limits, create a sitemap index file. This is a simple XML file that lists the locations of other sitemap files (e.g., sitemap-products-1.xml, sitemap-products-2.xml, sitemap-blog.xml). You then submit the URL of the index file to Google Search Console.
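As a rough sketch of how the split might work, the Python below chunks a URL list at the 50,000-URL limit and builds an index that points at the resulting files. The helper names and the example.com file URLs are illustrative assumptions, and the sketch does not check the 50MB size limit, which a real generator would also need to enforce.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_FILE = 50_000  # per-file URL limit stated above


def chunk_urls(urls, size=MAX_URLS_PER_FILE):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Return sitemap index XML listing the given child sitemap URLs."""
    ET.register_namespace("", SITEMAP_NS)
    root = ET.Element(f"{{{SITEMAP_NS}}}sitemapindex")
    for sitemap_url in sitemap_urls:
        node = ET.SubElement(root, f"{{{SITEMAP_NS}}}sitemap")
        ET.SubElement(node, f"{{{SITEMAP_NS}}}loc").text = sitemap_url
    body = ET.tostring(root, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical product URLs; 120,001 of them need three files.
    urls = [f"https://www.example.com/products/{n}" for n in range(120_001)]
    chunks = chunk_urls(urls)
    files = [
        f"https://www.example.com/sitemap-products-{i}.xml"
        for i in range(1, len(chunks) + 1)
    ]
    print(len(chunks))
    print(build_sitemap_index(files))
```

Each chunk would then be written to its own file with the usual <urlset> structure, and only the index URL is submitted.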

4. Utilize the <lastmod> Tag Accurately

The <lastmod> tag is your most powerful tool for signaling content freshness.

- Be Honest: Only update the <lastmod> date when the page's meaningful content has actually changed. Artificially updating it without real changes can erode Google's trust in your sitemap.

- Use the Correct Format: Use the W3C Datetime format, either a plain date (YYYY-MM-DD) or a full timestamp that includes a timezone offset.
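A small helper can keep <lastmod> values in a valid W3C Datetime form. This is a minimal sketch: format_lastmod is a hypothetical name, and treating timezone-naive datetimes as UTC is an assumption you should adjust to match how your system stores timestamps.

```python
from datetime import date, datetime, timezone


def format_lastmod(value):
    """Format a date or datetime as a W3C Datetime string for <lastmod>.

    Plain dates become YYYY-MM-DD. Datetimes become a full timestamp with
    a UTC offset. Naive datetimes are ASSUMED to be in UTC.
    """
    # datetime is a subclass of date, so it must be checked first.
    if isinstance(value, datetime):
        if value.tzinfo is None:
            value = value.replace(tzinfo=timezone.utc)
        return value.isoformat(timespec="seconds")
    return value.isoformat()


if __name__ == "__main__":
    print(format_lastmod(date(2025, 10, 24)))
    print(format_lastmod(datetime(2025, 10, 24, 9, 30, tzinfo=timezone.utc)))
```

The value passed in should come from the time the page's meaningful content last changed, not from the time the sitemap was regenerated.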

5. Submit Your Sitemap(s) to Google Search Console

Don't just create the file; tell Google where it is.

- Go to Google Search Console > Sitemaps.

- Enter the URL of your sitemap file (e.g., /sitemap.xml) or your sitemap index file (e.g., /sitemap_index.xml).

- Monitor the report for any errors Google encounters while processing it.

6. Reference Your Sitemap in robots.txt

Add a line to your robots.txt file that begins with Sitemap: followed by the full, absolute URL of your sitemap or sitemap index. This helps other search engines and bots discover it.
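One way to confirm the reference is in place is to parse robots.txt with Python's standard library and list the sitemaps it declares. The robots.txt content and the example.com URL below are made up for illustration; RobotFileParser.site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt content; the sitemap URL is a placeholder.
ROBOTS_TXT = """\
User-agent: *
Allow: /

Sitemap: https://www.example.com/sitemap_index.xml
"""


def sitemaps_declared(robots_txt):
    """Return the sitemap URLs declared in a robots.txt string."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


if __name__ == "__main__":
    print(sitemaps_declared(ROBOTS_TXT))
```

A check like this could run in a deployment pipeline so that a robots.txt change never silently drops the sitemap reference.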

Chapter 4: Common Sitemap Mistakes to Avoid

- Including Non-Canonical URLs: Wastes crawl budget and sends mixed signals.

- Including noindex URLs: Contradictory instructions for Google.

- Including URLs Blocked by robots.txt: Google can't crawl them anyway.

- Using Incorrect URL Formats: URLs must be absolute (including https://) and properly encoded.

- Infrequent Updates: Stale sitemaps delay the discovery of new content.

- Compression Errors: Ensure your sitemap is correctly encoded (UTF-8) and optionally gzipped (.gz) properly.
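Two of these mistakes, relative URLs and encoding or compression problems, are easy to guard against in code. The following sketch uses only the Python standard library; the function names are hypothetical, and the URL check verifies only that a scheme and host are present, not that the URL is otherwise valid.

```python
import gzip
from urllib.parse import urlsplit


def is_absolute_url(url):
    """Return True if the URL has an http(s) scheme and a host."""
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


def gzip_sitemap(xml_text):
    """Encode sitemap XML as UTF-8 and gzip it, returning the bytes."""
    return gzip.compress(xml_text.encode("utf-8"))


if __name__ == "__main__":
    print(is_absolute_url("https://www.example.com/page"))
    print(is_absolute_url("/page"))
    compressed = gzip_sitemap("<urlset></urlset>")
    # Round-trip to confirm the archive decompresses to the same text.
    print(gzip.decompress(compressed).decode("utf-8"))
```

The returned bytes would be written to a file such as sitemap.xml.gz; the 50MB limit mentioned earlier applies to the uncompressed size.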

Expert Insight for PSEO (Dynamic Sitemaps & <lastmod>):

"For PSEO, the combination of dynamic sitemap generation and accurate <lastmod> tags is non-negotiable. When your system generates 1,000 new pages or updates prices on 10,000 existing ones, your sitemap index and relevant sub-sitemaps must update automatically, reflecting the precise <lastmod> timestamp. This is the fastest signal you can send to Google saying, 'Hey, come re-crawl these specific URLs; they've changed!' Correctly using <lastmod> helps Google prioritize its crawl resources, directly benefiting your crawl budget and indexation speed."

Conclusion: Your Site's Table of Contents for Crawlers

While not a magic bullet for rankings, a well-optimized XML sitemap is a fundamental tool in your technical SEO arsenal, especially for large and dynamic websites. It ensures efficient content discovery, helps signal freshness, and provides a direct communication channel with search engines.

By following these best practices – focusing on clean, indexable URLs, dynamic generation, accurate metadata, and proper submission – you create a reliable roadmap that helps Google navigate and understand your site architecture effectively.