For a 50-page blog, "crawl budget" is an academic curiosity. For a 500,000-page e-commerce giant or a rapidly expanding Programmatic SEO (PSEO) site, it is the entire game. It is the fundamental, non-negotiable bottleneck that determines the speed and success of your entire search strategy.

If your valuable pages aren't being crawled, they cannot be indexed. If they aren't indexed, they cannot rank. It's that simple.

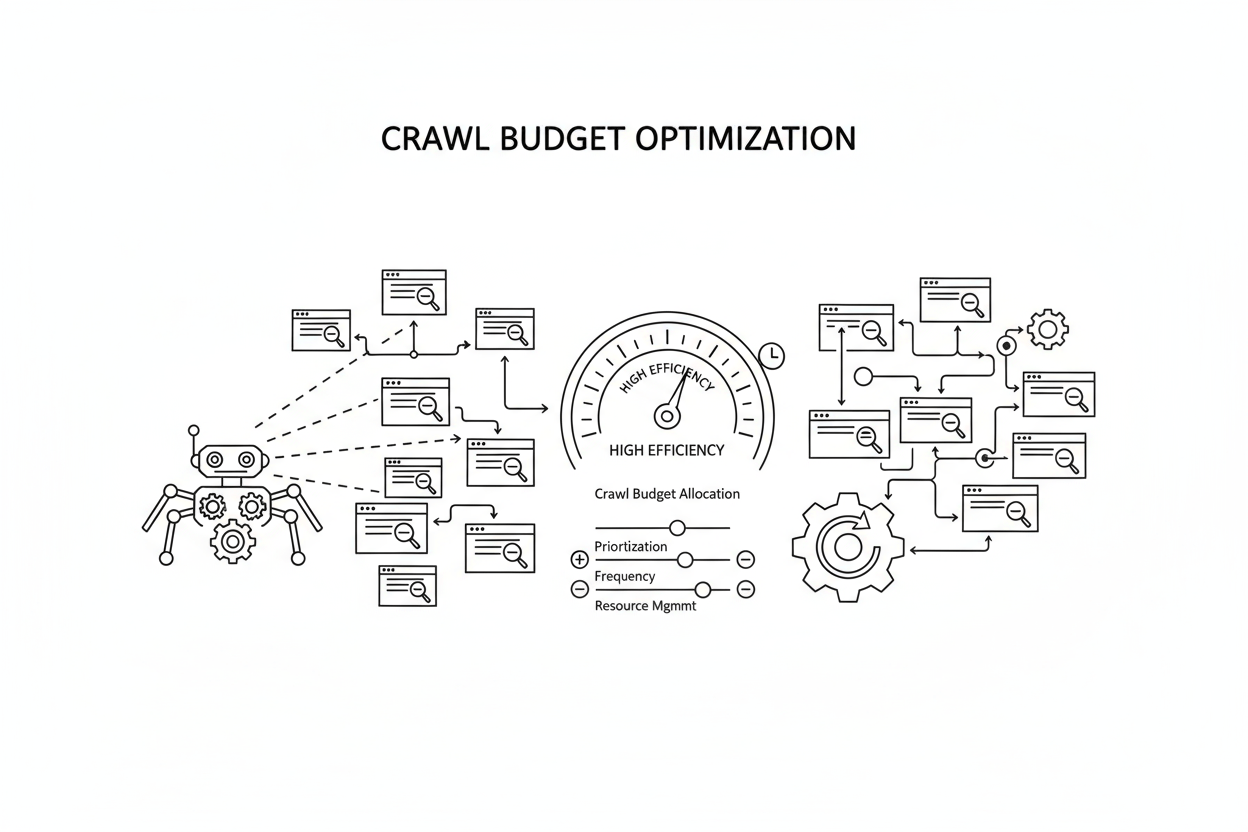

Welcome to the definitive guide on Crawl Budget Optimization. We are moving beyond basic definitions to provide an expert framework for diagnosing, controlling, and maximizing the efficiency of every visit Googlebot makes to your site. This is the technical foundation upon which all large-scale SEO success is built.

Chapter 1: What is Crawl Budget? (The Official Definition)

The most common mistake is thinking of crawl budget as a fixed number. It is not.

Google's official documentation on the topic clarifies that crawl budget is a dynamic concept made of two key components:

- Crawl Rate Limit (or "Crawl Health"): This is how much Google can crawl. Googlebot is designed to be a good citizen; it will not crawl your site so aggressively that it crashes your server. It dynamically adjusts its crawl rate based on your server's health. If your server response time (TTFB) is fast and you return 200 (OK) status codes, Googlebot will increase its rate. If your server slows down or returns a high number of 5xx (server errors), Googlebot will throttle its crawling back.

- Crawl Demand (or "Crawl Schedule"): This is how much Google wants to crawl. This is determined by two factors:

  - Popularity: URLs that are more popular on the internet (i.e., have more high-quality backlinks) are seen as more important and will be crawled more often.

  - Staleness: Google's systems try to prevent URLs from becoming "stale." Content that is known to update frequently (like a news homepage) will be crawled more often than a static "About Us" page.

Crawl Budget Optimization, therefore, is the practice of maximizing both your Crawl Rate Limit (by having a fast, healthy server) and your Crawl Demand (by building authority and managing freshness signals), while simultaneously ensuring that every crawl request is spent on your most valuable, indexable URLs.

Chapter 2: The Critical Link: Crawl Budget & Programmatic SEO

The entire premise of Programmatic SEO is the creation of thousands, or even millions, of high-value pages at scale. But as we detail in our complete PSEO guide, this strategy has a critical vulnerability.

Let's imagine you launch a PSEO project with 100,000 new, valuable pages.

- Your server health is average, so Google allocates 5,000 crawls per day.

- However, due to a poor internal linking structure and unmanaged URL parameters, 4,000 of those crawls (80%) are wasted on low-value, duplicate, or non-indexable pages (e.g., ?sort=price_asc, ?filter=color_blue, etc.).

- This leaves only 1,000 "effective" crawls per day for your real content.

- Result: It would take 100 days for Google to discover all your new pages, by which time your competitors have already captured the market.

Crawl budget is the gatekeeper of your PSEO strategy's ROI. Your goal is to eliminate "Crawl Waste" and ensure that 90%+ of Googlebot's time is spent on pages that can actually earn you money.
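The arithmetic behind that scenario can be sketched in a few lines. This is a simplified model, assuming (as the example above does) that every non-wasted crawl lands on a distinct new page; the function name `days_to_crawl` is made up for illustration.

```python
def days_to_crawl(new_pages: int, crawls_per_day: int, wasted_per_day: int) -> int:
    """Days needed for every new page to be crawled once.

    Simplifying assumption: each effective crawl hits a different new page.
    """
    effective = crawls_per_day - wasted_per_day
    if effective <= 0:
        raise ValueError("no effective crawls left after waste")
    # Integer ceiling division, so a partial day counts as a full day.
    return -(-new_pages // effective)


# The scenario above: 5,000 crawls/day, 4,000 of them wasted.
print(days_to_crawl(100_000, 5_000, 4_000))  # 100

# Same site with waste cut to 10% (500 crawls/day).
print(days_to_crawl(100_000, 5_000, 500))    # 23
```

Under these assumptions, cutting waste from 80% to 10% shortens discovery from 100 days to 23 without Google allocating a single extra crawl.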

Chapter 3: How to Diagnose Your Crawl Budget (The 2-Level Approach)

Before you can optimize, you must diagnose.

Level 1: The Quick-Check (Google Search Console)

The "Crawl Stats" report in GSC (under Settings) is your first stop. It is a simplified, aggregated view of Googlebot's activity. Pay close attention to:

- Total Crawl Requests: Is this number trending up or down?

- By Crawl Purpose: Are you seeing excessive "Discovery" crawls (meaning Google is struggling to find your content) or healthy "Refresh" crawls?

- By File Type: Is Google wasting time crawling CSS or JS files that should be cached?

- By Response: A high share of 5xx (server error) responses is a major red flag that is actively reducing your crawl rate, while a high share of 404 (not found) responses means crawl requests are being spent on dead URLs.

Level 2: The Professional Deep-Dive (Log File Analysis)

The GSC report tells you what happened, but it doesn't tell you where. The only way to get a complete, granular view of every single URL Googlebot visited is through log file analysis.

This is the single most powerful technical SEO task. Analyzing your server logs allows you to see:

- Which specific low-value URLs Googlebot is hitting thousands of times a day.

- How much budget is being wasted on 404 pages.

- How often Googlebot is crawling your most important vs. unimportant pages.

- The exact "Crawl Efficiency Score" of your site.
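As a rough starting point, a log analysis like this can be sketched with Python's standard library. The snippet assumes the common "combined" access log format and uses invented sample lines; the names `googlebot_hits` and `summarize` are illustrative. It trusts the user-agent string, which can be spoofed, so a production analysis would also verify that the requests really come from Google.

```python
import re
from collections import Counter

# Request, status, referrer and user-agent fields of a combined-format
# log line (an assumed format; adjust the pattern to your server).
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def googlebot_hits(lines):
    """Yield (path, status) for requests whose user agent claims to be Googlebot."""
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            yield match.group("path"), int(match.group("status"))


def summarize(lines):
    """Count Googlebot requests by status code and count parameterized URLs."""
    by_status = Counter()
    parameterized = 0
    for path, status in googlebot_hits(lines):
        by_status[status] += 1
        if "?" in path:
            parameterized += 1
    return by_status, parameterized


# Made-up sample lines, for illustration only.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Jan/2025:10:00:00 +0000] "GET /products/blue-widget HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2025:10:00:01 +0000] "GET /products?sort=price_asc HTTP/1.1" 200 4800 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Jan/2025:10:00:02 +0000] "GET /old-page HTTP/1.1" 404 320 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.7 - - [10/Jan/2025:10:00:03 +0000] "GET /products/blue-widget HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
]

by_status, parameterized = summarize(SAMPLE_LOG)
print(dict(by_status), parameterized)
```

On real logs, the same counters grouped by URL pattern show which sections of the site absorb the most Googlebot requests.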

Chapter 4: The 3 Pillars of Crawl Budget Optimization

Optimizing your budget is a three-pronged attack:

Pillar 1: Stop Crawl Waste (Controlling the Crawler)

This is the most important step. You must actively tell Googlebot where not to go. This is how you conserve your budget for your high-value PSEO pages.

Your primary tools for this are robots.txt, canonical tags, and the noindex meta tag.

- Use robots.txt to block entire sections of your site that provide zero SEO value. This includes user profile pages, shopping cart/checkout processes, and any URL parameter that creates duplicate content.

- Use rel="canonical" to consolidate signals for pages with similar content.

- Use the noindex meta tag on pages you want Google to crawl but not include in the index (e.g., thin thank-you pages or internal search results).
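Before deploying robots.txt rules, it helps to test them against real URLs. The sketch below uses Python's standard-library `urllib.robotparser` with a hypothetical rule set; the `/cart/`, `/checkout/`, and `/users/` paths and the `example.com` URLs are assumptions, not paths from any real site. The standard-library parser does plain path-prefix matching, so wildcard patterns for URL parameters would need to be checked with a different tool.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules blocking sections with no SEO value.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /users/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_crawlable(url: str, agent: str = "Googlebot") -> bool:
    """Return True if the parsed rules allow `agent` to fetch `url`."""
    return parser.can_fetch(agent, url)


for url in (
    "https://example.com/products/blue-widget",
    "https://example.com/cart/view",
    "https://example.com/users/12345",
):
    print(url, "->", "allowed" if is_crawlable(url) else "blocked")
```

One caveat when combining these tools: a URL blocked in robots.txt is never fetched, so Googlebot cannot see a noindex tag on it. Pages that rely on noindex must stay crawlable.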

Pillar 2: Fix Crawl "Traps" & Infinite Loops

Crawl traps are technical black holes that can consume your entire budget. The most common culprit, especially on PSEO and e-commerce sites, is faceted navigation (i.e., filters).

When Googlebot can combine filters (?color=red&size=large&brand=x), it can create millions or even billions of unique, low-value URLs. You must have a strategy (using robots.txt, nofollow, or AJAX) to prevent this.
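To see how quickly filters multiply, here is a small estimate. It assumes each facet is either unset or set to exactly one value, and that parameter order is normalized; the facet sizes are invented for illustration.

```python
from math import prod


def facet_url_count(options_per_facet: dict[str, int]) -> int:
    """Count filtered URL variants of one listing page.

    Each facet contributes (number of values + 1) choices, the extra one
    being "not applied". The fully unfiltered page is subtracted at the end.
    """
    return prod(count + 1 for count in options_per_facet.values()) - 1


# Hypothetical category page with three filters.
facets = {"color": 12, "size": 8, "brand": 50}
per_category = facet_url_count(facets)
print(per_category)           # 5966 filtered variants of a single page
print(per_category * 1_000)   # 5966000 across 1,000 such category pages
```

Allowing multiple values per facet, or letting the same filters appear in different parameter orders, pushes the count far higher than this conservative estimate.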

Other common issues include:

- Broken Internal Links (404s): Fix them. Every crawl on a 404 is 100% wasted.

- Long Redirect Chains: Each redirect in a chain (e.g., Page A -> Page B -> Page C) wastes a crawl. Fix all chains to point directly to the final destination (Page A -> Page C).
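Flattening redirect chains is easy to automate once you have a redirect map exported from your server or CMS. The following is a minimal sketch, assuming the map fits in a dictionary of source to target paths; `flatten_redirects` is an illustrative name.

```python
def flatten_redirects(redirects: dict[str, str]) -> dict[str, str]:
    """Point every redirecting URL straight at its final destination."""
    flattened = {}
    for start in redirects:
        seen = {start}
        target = redirects[start]
        # Follow the chain until we reach a URL that does not redirect.
        while target in redirects:
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[start] = target
    return flattened


# Page A -> Page B -> Page C becomes Page A -> Page C and Page B -> Page C.
chain = {"/page-a": "/page-b", "/page-b": "/page-c"}
print(flatten_redirects(chain))
# {'/page-a': '/page-c', '/page-b': '/page-c'}
```

The loop check matters in practice: a redirect cycle is itself a crawl trap, so it is better to fail loudly than to publish it.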

Pillar 3: Increase Crawl Rate & Demand (Speed & Authority)

Once you have plugged the leaks, you can focus on expanding the pipe.

- Improve Server Response Time (TTFB): This is the most direct way to improve your Crawl Rate Limit. A faster server (under 300ms) signals to Google that you can handle more crawls.

- Build High-Quality Backlinks: This is the most direct way to improve Crawl Demand. Links from authoritative sites signal "popularity" and tell Google your content is important and deserves to be crawled more frequently.

- Optimize Sitemaps: Use dynamic XML sitemaps that update automatically. Use the `<lastmod>` tag to accurately signal when your PSEO pages are updated, encouraging Google to re-crawl them.
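A dynamic sitemap can be generated from whatever store holds your page data. The sketch below assumes a list of (URL, last-modified date) pairs and uses only the standard library; the `example.com` URLs are placeholders, and `build_sitemap` is an illustrative name. The `<lastmod>` value should reflect the date the page content really changed, not the time the sitemap was generated.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Build a sitemap XML string with one <url> entry per page."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, modified in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        # W3C date format (YYYY-MM-DD), taken from the page's own record.
        ET.SubElement(url, "lastmod").text = modified.isoformat()
    return ET.tostring(urlset, encoding="unicode")


# Placeholder pages; in practice these come from your database.
pages = [
    ("https://example.com/plumbers/austin", date(2025, 1, 15)),
    ("https://example.com/plumbers/dallas", date(2025, 1, 20)),
]
print(build_sitemap(pages))
```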

Chapter 5: A Framework for Measuring Success: Crawl Efficiency

Expert Insight from seopage.ai: *"Stop guessing. Start measuring. We use a metric called Crawl Efficiency.*

*Crawl Efficiency = (Valid, Indexable 200-Status URLs Crawled / Total URLs Crawled) × 100*

*A log file analysis might show Googlebot made 100,000 requests in a day. But if 70,000 of those were for parameterized URLs, 404s, or redirected pages, your Crawl Efficiency is only 30%.*

*For our PSEO clients, our primary goal is to get this number above 90%. This single metric is the #1 KPI for technical PSEO health."*
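The Crawl Efficiency formula translates directly into code. This is a minimal sketch; the function and parameter names are illustrative, and the two input counts are assumed to come from your own log file analysis.

```python
def crawl_efficiency(valid_indexable_200: int, total_crawled: int) -> float:
    """Percentage of Googlebot requests spent on valid, indexable 200-status URLs."""
    if total_crawled <= 0:
        raise ValueError("total_crawled must be positive")
    return 100 * valid_indexable_200 / total_crawled


# The example from the quote: 100,000 requests, 70,000 of them wasted.
total = 100_000
wasted = 70_000
print(crawl_efficiency(total - wasted, total))  # 30.0
```

Tracking this number over time, rather than as a one-off audit, shows whether fixes to parameters, 404s, and redirects are actually shifting Googlebot toward your valuable pages.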

Conclusion: Crawl Budget is the Enabler of Scale

Crawl budget optimization is the unseen, highly technical foundation of all successful large-scale websites. By moving from a passive observer to an active manager of Googlebot's behavior, you are building a significant competitive advantage.

When you master your crawl budget, you are ensuring that every new page you create has the maximum possible chance of being indexed, ranked, and driving revenue.