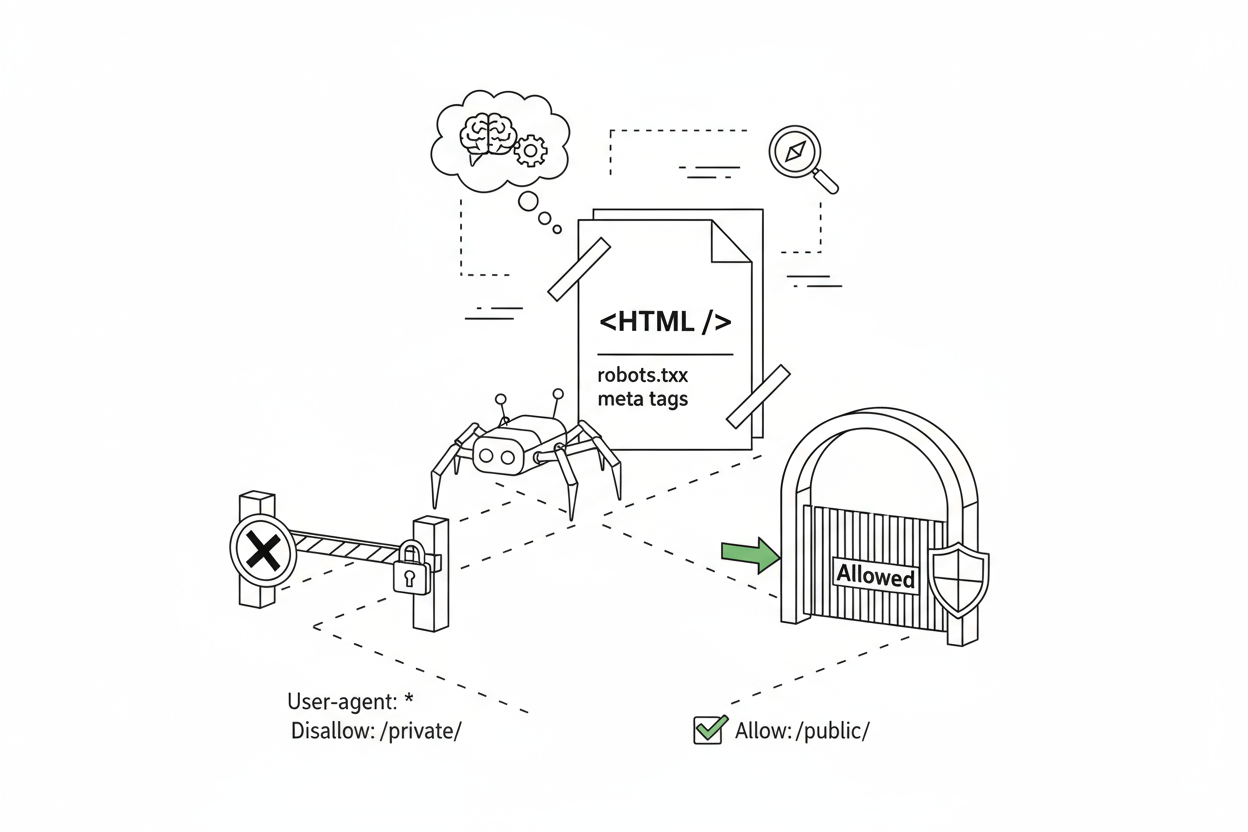

If your server log files are the diagnostic report of your crawl budget, then your robots.txt file and meta tags are the control panel. These are the primary, most powerful tools you have to actively command search engine crawlers, telling them where they can go, what they can see, and what they are allowed to index.

For a large-scale Programmatic SEO site, mastering these tools is not optional. It is the fundamental difference between an efficient, indexed site and a chaotic, crawl-wasting black hole.

This guide moves beyond basic definitions to provide a strategic framework for using these tools to conserve crawl budget and ensure Googlebot’s time is spent only on your most valuable pages.

Chapter 1: The Critical Concept: Disallow (Block Crawling) vs. noindex (Block Indexing)

This is one of the most misunderstood concepts in technical SEO, and getting it wrong can be disastrous for crawl budget.

| Feature | Disallow (in robots.txt) | noindex (in Meta Tag / X-Robots-Tag) |
| --- | --- | --- |
| What it does | Stops crawling. | Stops indexing. |
| How it works | A rule in a text file that Googlebot reads before it requests a page. | A directive in the HTML head (or an HTTP header) that Googlebot reads after it has fetched the page. |
| Crawl budget | Saves crawl budget (Googlebot never makes the request). | Still costs crawl budget (Googlebot must crawl the page to see the noindex directive). |
| The "gotcha" | If a disallowed page gets external links, Google may still index its URL without crawling it (reported as "Indexed, though blocked by robots.txt" in GSC). | The page is removed from the index, but only after Googlebot recrawls it and sees the directive. |

Expert Insight: The "De-index & Block" Two-Step

As confirmed by Google's own developer documentation, you cannot Disallow a page and expect its noindex tag to be read. Googlebot must be allowed to crawl a page to see its noindex tag.

The Correct Process:

- Step 1 (The noindex): Add the `<meta name="robots" content="noindex">` tag to the page. Crucially, ensure this page is NOT blocked in robots.txt.

- Step 2 (The Wait): Wait until the page has been de-indexed. You can verify this using the site: operator (e.g., site:yourdomain.com/your-page) or by checking GSC's "Pages" report.

- Step 3 (The Disallow): After it's gone from the index, add a Disallow rule in your robots.txt file to prevent Googlebot from ever wasting budget on it again.
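The ordering of these steps is easy to get wrong when many URLs are in flight, so it can help to encode it. The following is a minimal sketch, not a definitive implementation: `PageState` and `next_action` are hypothetical names, and the three flags are assumed to come from your own checks (your templates, your robots.txt, and GSC or a site: query).

```python
from dataclasses import dataclass


@dataclass
class PageState:
    has_noindex: bool        # page currently serves a noindex directive
    blocked_in_robots: bool  # a Disallow rule currently matches the URL
    in_index: bool           # page still appears in Google's index


def next_action(state: PageState) -> str:
    """Return the next step of the de-index-then-block sequence."""
    if state.in_index:
        if state.blocked_in_robots:
            # Googlebot cannot read a noindex tag on a page it may not crawl.
            return "remove Disallow so the noindex can be seen"
        if not state.has_noindex:
            return "add noindex"
        return "wait for de-indexing"
    if not state.blocked_in_robots:
        return "add Disallow"
    return "done"


print(next_action(PageState(has_noindex=True, blocked_in_robots=False, in_index=True)))
```

The key design choice is that a Disallow is only ever recommended once the page is out of the index.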

Chapter 2: Mastering robots.txt for Crawl Budget Conservation

Your robots.txt file is your first line of defense. It's a simple text file, but it's the most powerful lever you have for saving crawl budget before it's spent. For the official specification, always refer to Google's documentation on robots.txt.

Basic Syntax

The file consists of rulesets:

- User-agent: Specifies which bot the rule applies to (e.g., Googlebot, * for all bots).

- Disallow: Tells the bot not to crawl the specified URL path.

- Allow: Explicitly permits crawling, which can override a broader Disallow rule.
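To make the Allow-overrides-Disallow behavior concrete, here is a rough sketch of how a single ruleset could be evaluated. It assumes the precedence Google documents (the longest matching path wins, and Allow wins a tie) and its wildcard syntax (`*` for any characters, a trailing `$` for end of URL). The function names and the `/search/help` path are illustrative assumptions, not part of any library.

```python
import re


def pattern_to_regex(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex anchored at the start."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # '*' matches any run of characters; everything else is literal.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """rules: ('allow' | 'disallow', pattern) pairs from one user-agent group."""
    best_len, allowed = -1, True  # no matching rule means crawling is allowed
    for kind, pattern in rules:
        if not pattern:  # an empty pattern matches nothing
            continue
        if pattern_to_regex(pattern).match(path):
            is_allow = kind == "allow"
            # Longer pattern wins; on a tie, the less restrictive Allow wins.
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


rules = [("disallow", "/search/"), ("allow", "/search/help")]
for path in ("/search/results", "/search/help", "/products/"):
    print(path, is_allowed(rules, path))
```

Here `/search/help` stays crawlable because its Allow pattern is longer than the broader `/search/` Disallow.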

Strategic Use Cases for PSEO & Large Sites

1. Blocking All Parameterized URLs (The #1 PSEO Killer)

This is the most important rule for any site with filters. It stops Googlebot from getting lost in crawl traps.

    User-agent: Googlebot
    # Blocks any URL containing a "?"
    # This is a broad but highly effective rule for crawl traps.
    Disallow: /*?*

This single line can prevent millions of duplicate URLs from being crawled, which is a core solution for the problems found in managing faceted navigation.

2. Blocking Low-Value Site Sections

You must actively block sections that have no SEO value.

    User-agent: *
    # Block administrative and user-specific areas
    Disallow: /cart/
    Disallow: /checkout/
    Disallow: /my-account/
    Disallow: /wp-admin/
    # Block internal search results
    Disallow: /search/

Why let Googlebot crawl your shopping cart 10,000 times a day? This is pure crawl waste. If both rulesets live in the same file, remember that Googlebot follows only the most specific group that names it, so repeat these Disallow lines under User-agent: Googlebot as well.

3. Finding What to Block

How do you know which parameters to block? This is where your log file analysis comes in. Your logs will show you the exact useless URLs that Googlebot is hitting most often. Use that data to build your robots.txt rules.
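As a starting point for that analysis, the sketch below counts how often Googlebot requests each query parameter. It assumes access logs in the common "combined" format, and the sample lines are invented for illustration. Matching on the "Googlebot" substring is also a simplification, since user agents can be spoofed.

```python
import re
from collections import Counter
from urllib.parse import urlsplit, parse_qsl

# Matches the request part of a combined-format log line (assumed format).
REQUEST_RE = re.compile(r'"(?:GET|HEAD) (\S+) HTTP/[\d.]+"')

GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"

# Invented sample data, for illustration only.
SAMPLE_LOG = [
    f'66.249.66.1 - - [10/Oct/2024:13:55:36 +0000] "GET /shoes?color=red&sort=price HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Oct/2024:13:55:40 +0000] "GET /shoes?color=blue HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Oct/2024:13:56:01 +0000] "GET /shoes?color=green HTTP/1.1" 200 512 "-" "Mozilla/5.0 (X11; Linux x86_64)"',
    f'66.249.66.1 - - [10/Oct/2024:13:56:10 +0000] "GET /about HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
]


def googlebot_param_hits(log_lines):
    """Count Googlebot requests per query parameter name."""
    counts = Counter()
    for line in log_lines:
        if "Googlebot" not in line:  # substring check only; see caveat above
            continue
        match = REQUEST_RE.search(line)
        if not match:
            continue
        query = urlsplit(match.group(1)).query
        for name, _ in parse_qsl(query, keep_blank_values=True):
            counts[name] += 1
    return counts


for name, hits in googlebot_param_hits(SAMPLE_LOG).most_common():
    print(name, hits)
```

Parameters that dominate this count without producing unique content are the natural candidates for a Disallow rule.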

Chapter 3: The Meta Tag Toolkit: Your Page-Level Scalpels

If robots.txt is an axe, meta tags are scalpels. They provide page-by-page instructions after Googlebot has arrived. You can find all options in Google's official guide to robots meta tags.

noindex

The most important meta tag for index management. It tells Google, "You can crawl this page, but do not show it in search results."

    <meta name="robots" content="noindex">

- Use Case: Thin content, "thank you" pages, test pages, or internal search results pages (if you can't block them via robots.txt).

nofollow

Tells Google not to follow the links on this page: it should not pass PageRank through them or rely on them for discovery.

    <meta name="robots" content="nofollow">

- Use Case: Often combined with noindex (`<meta name="robots" content="noindex, nofollow">`) on low-quality pages to prevent Google from discovering more low-quality links.
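On a programmatic site, it is worth confirming that templates actually emit the directives you intend. The following is a minimal sketch using only Python's standard library. The class and function names are hypothetical, and it reads just the `robots` and `googlebot` meta tags from raw HTML, so anything injected later by JavaScript is not covered.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for token in attr.get("content", "").split(","):
                if token.strip():
                    self.directives.add(token.strip().lower())


def robots_directives(html: str) -> set:
    """Return the set of robots directives declared in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head><body></body></html>'
print(sorted(robots_directives(page)))
```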

canonical vs. noindex

This is a common point of confusion.

- rel="canonical": This tag is for consolidation. It tells Google, "This page is a copy of another page. Please combine all authority signals (like links) and send them to the main version." It does not save crawl budget, as Google must crawl the page to see the tag.

- noindex: This tag is for removal. It tells Google, "This page should not exist in your index at all."

Use canonical for duplicates (e.g., ?sort=price points to the main category). Use noindex for pages you want to be completely erased from search.
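For the duplicate case, the canonical target can usually be derived from the URL itself. The sketch below is one possible approach, assuming that parameters such as `sort` only reorder the same content; the parameter set and function names are illustrative and would need to match your own site.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit, parse_qsl, urlencode

# Hypothetical parameters that only reorder or re-display the same content.
NON_CANONICAL_PARAMS = {"sort", "order", "view"}


def canonical_url(url: str) -> str:
    """Drop presentation-only parameters to get the canonical target."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in NON_CANONICAL_PARAMS
    ]
    # The fragment is dropped because it never reaches the server.
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def canonical_tag(url: str) -> str:
    """Build the <link> element to place in the duplicate page's head."""
    return f'<link rel="canonical" href="{escape(canonical_url(url), quote=True)}">'


print(canonical_tag("https://example.com/shoes?sort=price"))
```

Parameters that change the content itself (pagination, for example) are kept, so those pages do not point at the wrong canonical.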

Chapter 4: The Advanced Tool: X-Robots-Tag

What if you want to noindex a file that isn't an HTML page, like a PDF file or an image? You can't put a meta tag in a PDF.

The solution is the X-Robots-Tag. This is an HTTP response header that your server sends along with the file. It's invisible to users but read by Googlebot. You can see the full implementation details in Google's official guide on X-Robots-Tag.

Example (in an .htaccess file for Apache servers):

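    # Noindexes all PDF files on the site
    # Requires mod_headers to be enabled
    <FilesMatch "\.pdf$">
      Header set X-Robots-Tag "noindex"
    </FilesMatch>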
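Once the header is being served, you can check what a crawler would read from it. The sketch below only parses header values you have already collected (for example from a HEAD request); it does not fetch anything. The function name is hypothetical, and the handling of the optional user-agent prefix is an assumption based on the syntax Google documents, such as `googlebot: noindex`.

```python
# Directives that carry their own value after a colon, so a leading
# "name:" in these cases is not a user-agent prefix.
VALUED_DIRECTIVES = {
    "unavailable_after",
    "max-snippet",
    "max-image-preview",
    "max-video-preview",
}


def blocks_indexing(header_values, bot="googlebot"):
    """Return True if any X-Robots-Tag value tells `bot` (or all bots) not to index."""
    for value in header_values:
        prefix, sep, rest = value.partition(":")
        agent = prefix.strip().lower()
        if sep and "," not in prefix and agent not in VALUED_DIRECTIVES:
            if agent != bot:
                continue  # rule addressed to a different crawler
            value = rest
        tokens = {token.strip().lower() for token in value.split(",")}
        if tokens & {"noindex", "none"}:  # "none" means noindex plus nofollow
            return True
    return False


print(blocks_indexing(["noindex, nofollow"]))
print(blocks_indexing(["bingbot: noindex"]))
```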

Chapter 5: How to Verify Your Directives

Never "set it and forget it." Always verify your changes.

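- Verify robots.txt: Before deploying, test your rules against specific URLs (like a parameterized URL) to ensure they are correctly blocked, and test your homepage to ensure it is still allowed. Google retired the standalone robots.txt Tester in Search Console in late 2023; the robots.txt report there now shows which robots.txt files Google fetched and any parsing errors, and the URL Inspection Tool reports whether a given live URL is blocked.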

- Verify noindex & canonical: Use the URL Inspection Tool in Google Search Console. Enter a specific URL, and GSC will tell you:
  - If the page is "Disallowed by robots.txt."
  - If Google has seen a noindex tag ("Indexing not allowed: 'noindex' detected").
  - What the "User-declared canonical" is and what "Google-selected canonical" is.
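Part of this verification can be scripted before a robots.txt change ships. The following is a rough sketch built on Python's standard `urllib.robotparser`; the rules and URLs are hypothetical examples. One important caveat: that parser applies rules in file order and does not implement Google's `*` and `$` wildcards, so this check is only meaningful for plain path-prefix rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules under test (prefix rules only; see caveat above).
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /checkout/
Disallow: /search/
"""

# Hypothetical URLs paired with the crawl outcome we expect (True = allowed).
EXPECTATIONS = {
    "https://example.com/": True,
    "https://example.com/category/shoes/": True,
    "https://example.com/cart/": False,
    "https://example.com/search/red-shoes": False,
}


def check_rules(robots_txt, expectations, agent="Googlebot"):
    """Return the URLs whose allow/block outcome differs from what we expect."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url, expected in expectations.items()
        if parser.can_fetch(agent, url) != expected
    ]


mismatches = check_rules(ROBOTS_TXT, EXPECTATIONS)
print(mismatches or "all expectations met")
```

Keeping the homepage and a few money pages in the expectations guards against a rule that accidentally blocks too much.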

Conclusion: Active Management is Not Optional

On a large-scale or PSEO site, robots.txt and meta tags are living documents. Every log file analysis you run should inform a review of your crawler directives.

By actively and strategically managing which parts of your site Google can crawl and index, you are channeling your finite crawl budget away from waste and directly towards your high-value pages. This active management is the core of a successful Crawl Budget Optimization strategy.